AppDat’s DevSecOps Platform #

AppDat provides a fully managed Source Code Management (SCM) and DevSecOps platform built on a self-hosted instance of GitLab Ultimate. As described by GitLab, a DevSecOps platform allows organizations to deliver software faster while strengthening security and compliance, thereby maximizing the return on software development.

AppDat provides GitLab both as a standalone service and as an integrated component of the AppDat platform’s Kubernetes-based application hosting services, giving AppDat tenants a fully managed, end-to-end, DevSecOps-enabled continuous delivery framework.

Learn more about the DevSecOps solutions provided by GitLab.

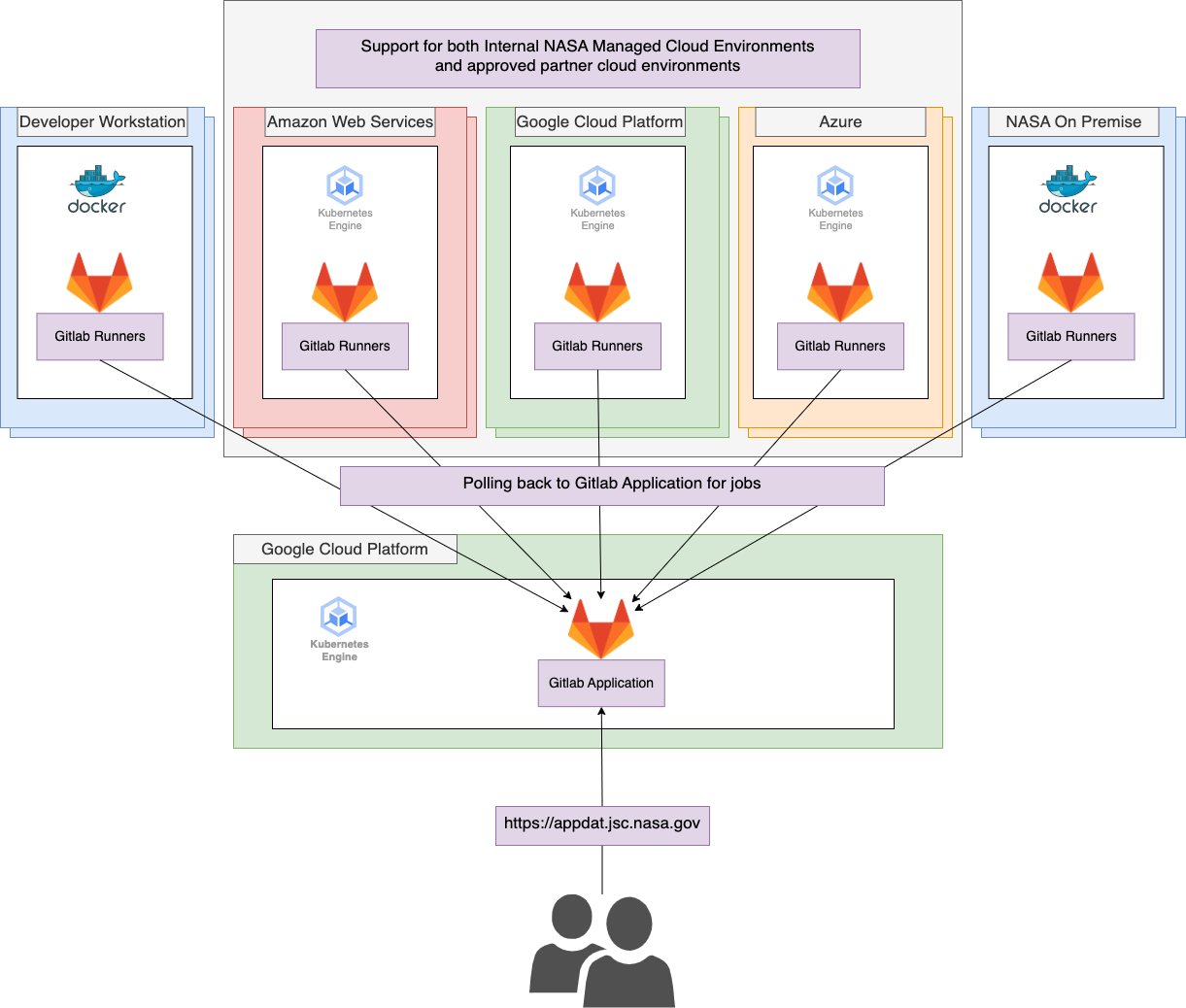

CI/CD Runner Architecture #

AppDat runs all aspects of the GitLab application and the “shared” CI/CD runners on Kubernetes. The application is operated in isolation within a dedicated Kubernetes cluster. AppDat also operates a set of “shared runners”, provided by default to all users of the AppDat DevSecOps Platform, which run in their own dedicated Kubernetes clusters.

The CI/CD runners “poll” the GitLab application for jobs, which makes it easy to run GitLab Runners in different environments without updating firewall rules or permitting inbound traffic. This allows AppDat Tenants to deploy GitLab Runners within any environment, including their local developer workstations.
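As a rough illustration of the polling model described above, a tenant could run a GitLab Runner on a workstation with Docker Compose. This is a sketch under assumptions, not AppDat's documented setup: the service name and the host configuration directory are hypothetical, and the runner still has to be registered against the GitLab instance before it picks up any jobs.

```yaml
services:
  gitlab-runner:                         # hypothetical service name
    image: gitlab/gitlab-runner:latest
    restart: unless-stopped
    volumes:
      # Runner configuration (config.toml) kept on the host; the host path is an assumption.
      - ./runner-config:/etc/gitlab-runner
      # Lets the runner start job containers through the host's Docker daemon.
      - /var/run/docker.sock:/var/run/docker.sock
    # No "ports:" section is needed: the runner only makes outbound
    # requests to the GitLab application to ask for jobs.
```

Because nothing is published inbound, the same file should work behind a corporate firewall or NAT as long as the host can reach the GitLab instance over HTTPS.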

AppDat DevSecOps Templates #

AppDat has developed a set of reusable, general-purpose, and extensible CI/CD templates that are available to all AppDat DevSecOps Platform Tenants. These templates are versioned and provide the core CI stages and jobs that support most use cases.

Several templates are available, and their functionality is continuously being improved and expanded. At a high level, however, these templates support:

- Build: Builds source code into a tagged Docker container image and pushes that image to the appropriate GitLab Container Registry.

- Test: The test stage is a series of security scanning jobs that perform a variety of security and compliance scans against the source code.

- Versioning: Versions the source code using the industry standard, Semantic Versioning.

- Deploy: The deploy stage is available to AppDat container hosting tenants. These jobs manage the automated deployment of source code into the AppDat-managed Kubernetes environments.
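As an illustration of how a tenant project might consume versioned templates like these, the sketch below uses GitLab's `include` keyword. The project path, tag, file name, and variable are hypothetical placeholders, not the actual AppDat template locations or inputs.

```yaml
include:
  - project: 'example-group/ci-templates'      # hypothetical template project path
    ref: 'v1.2.3'                               # hypothetical tag that pins a template version
    file: '/templates/container-pipeline.yml'   # hypothetical template file

variables:
  IMAGE_NAME: my-service   # hypothetical variable; real templates define their own inputs
```

Pinning `ref` to a tag rather than a branch keeps pipeline behavior stable until the project deliberately moves to a newer template version.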

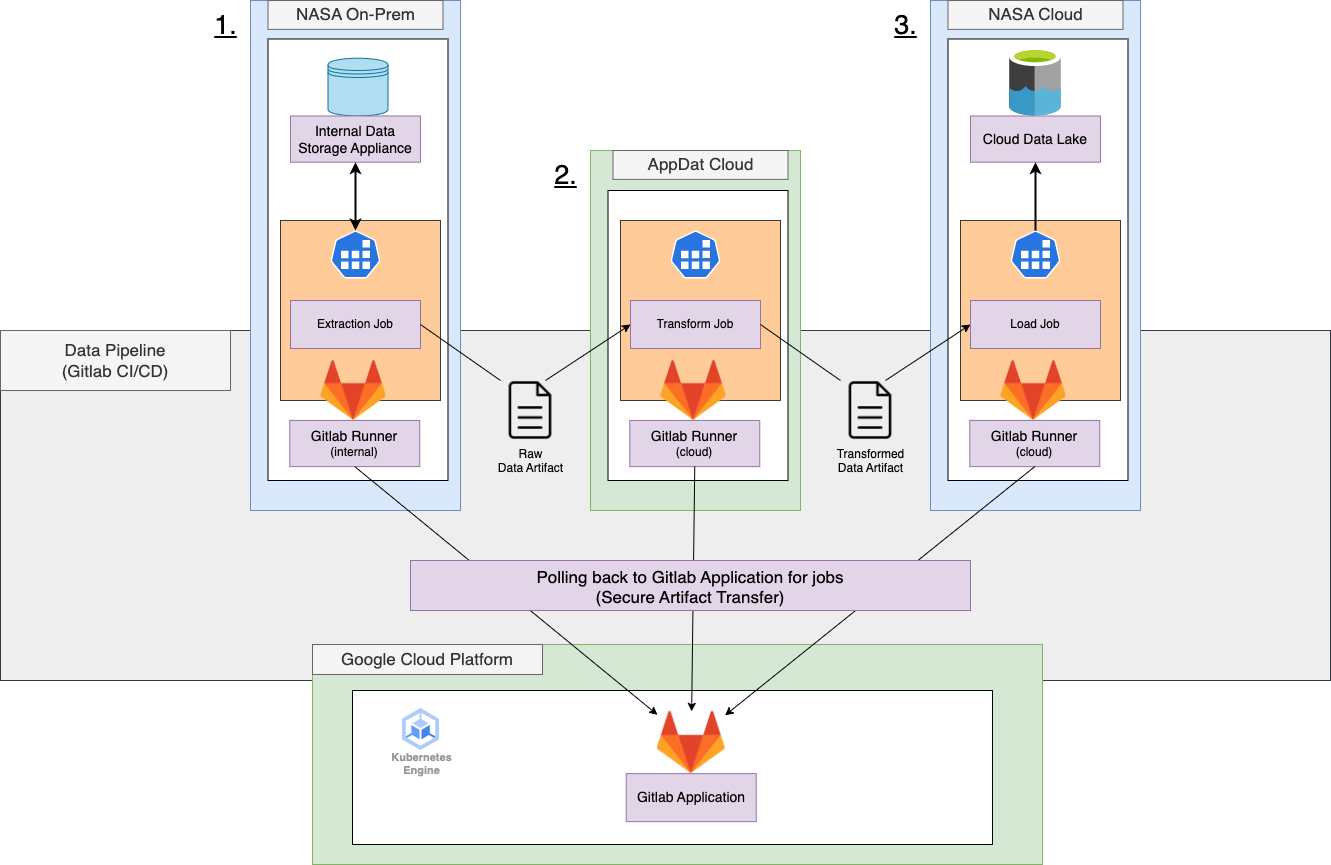

AppDat GitLab for Data Pipelines #

One largely unknown use case that is well supported by the GitLab CI/CD infrastructure is using CI/CD pipelines to perform data operations such as the extraction, transformation, and loading (ETL) of data into a data lake or data warehouse.

Several facets of the GitLab CI/CD infrastructure support data engineering and data pipelines:

- Scheduling & Triggers - Pipelines can be run on a schedule or on demand via a programmatic trigger (API).

- Container-Based Jobs - Jobs are executed within a user-specified Docker image, so almost any technology or language is available.

- Localized GitLab Runner Usage - Jobs can target specific GitLab Runners operated inside local network boundaries, near existing databases.

- Job Polling - Runners “poll” the main application for their jobs, so no inbound firewall rule is needed.

- Artifact Output/Input - Jobs can pass data “artifacts” to later jobs, including jobs that run in completely different network zones.

A pipeline that uses these features could look something like the following in the .gitlab-ci.yml file:

    stages:
      - extract
      - transform
      - load

    extract-on-premise-data:
      stage: extract
      image: python:3.7
      tags:
        - internal-data-center-runner
      script:
        - pip install -r requirements.txt  # assumes dependencies are listed in requirements.txt
        - python extract.py  # performs extract and writes to data.csv
      artifacts:
        paths:
          - data.csv

    transform:
      stage: transform
      image: node:alpine
      script:
        - npm install
        - node transform.js data.csv  # takes data.csv as input and outputs the transformed JSON file
      artifacts:
        paths:
          - data.json

    load:
      stage: load
      tags:
        - data-lake-adjacent-runner
      image: gsutils
      script:
        # Service account key provided securely through a CI/CD variable (assumed to be a File-type variable)
        - export GOOGLE_APPLICATION_CREDENTIALS=$GCLOUD_SERVICE_KEY
        - gcloud auth activate-service-account --key-file="$GOOGLE_APPLICATION_CREDENTIALS"  # the gcloud CLI needs explicit activation
        - gcloud storage cp data.json gs://my-data-lake-bucket/  # loads file into bucket
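
The scheduling, trigger, and artifact facets listed earlier can also be expressed in the pipeline file itself. The variation below is a sketch under assumptions, not an AppDat-provided configuration: it limits pipelines to scheduled or trigger-token runs with `workflow:rules`, uses `needs` to state explicitly which artifacts the transform job consumes, and sets an assumed one-day retention with `expire_in`. The load stage is omitted for brevity.

```yaml
# Sketch only: schedules and trigger tokens themselves are created in the
# GitLab project settings or through the API, not in this file.
workflow:
  rules:
    # Create pipelines only for scheduled runs or trigger-token requests.
    - if: '$CI_PIPELINE_SOURCE == "schedule"'
    - if: '$CI_PIPELINE_SOURCE == "trigger"'

stages:
  - extract
  - transform

extract-on-premise-data:
  stage: extract
  image: python:3.7
  tags:
    - internal-data-center-runner
  script:
    - python extract.py
  artifacts:
    paths:
      - data.csv
    expire_in: 1 day   # assumed retention period for the raw extract

transform:
  stage: transform
  image: node:alpine
  needs:
    # Start as soon as the extract job succeeds and download only its artifacts.
    - job: extract-on-premise-data
      artifacts: true
  script:
    - node transform.js data.csv
  artifacts:
    paths:
      - data.json
    expire_in: 1 day   # assumed retention period
```

Restricting the whole pipeline with `workflow:rules`, rather than adding `rules` to a single job, avoids the case where a job listed under `needs` is missing from the pipeline.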